RAPTURE

RAPTURE: Advancing Smart Technology through Data and AI Supremacy

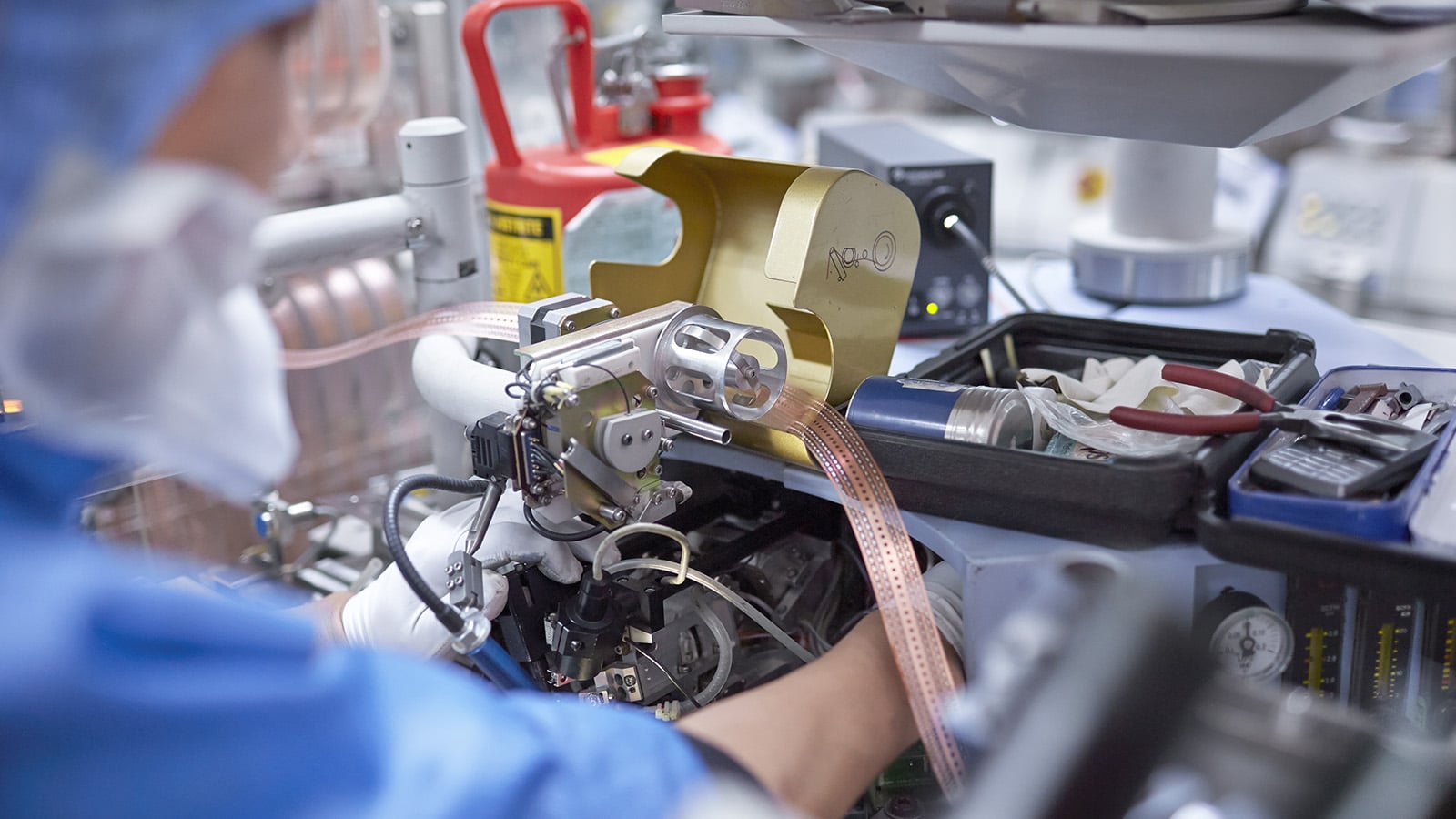

The RAPTURE project, launched in October 2023 in collaboration with NXP, aims to redefine the future of semiconductor technology and smart infrastructures. The project brings together the Jheronimus Academy of Data Science (JADS), NXP, and academic partners such as Tilburg University and Eindhoven University of Technology to push the boundaries of data-driven innovation in high-performance computing (HPC).

RAPTURE focuses on developing cutting-edge techniques to ensure that NXP’s AI-driven infrastructure and data pipelines work seamlessly with its organizational structure, driving measurable performance improvements. The project’s goal is to achieve “Data & AI Supremacy,” in which every element, from machine learning operations (MLOps) to data lakes, works in synergy, empowering NXP to maintain its competitive edge in the highly complex semiconductor market.

To address the challenges faced by centralized data systems, RAPTURE will explore advanced methodologies such as Chaos Engineering and AI-driven quality control automation. These approaches will not only enhance the reliability and scalability of data sharing across decentralized teams but also reduce the environmental impact of high-performance computing.

Running over the next three years, RAPTURE will offer significant contributions to both the academic community and the semiconductor industry. Insights and advancements from the project will be shared through academic publications and professional articles and integrated into educational programs, paving the way for a smarter, more sustainable technological future.

Success stories

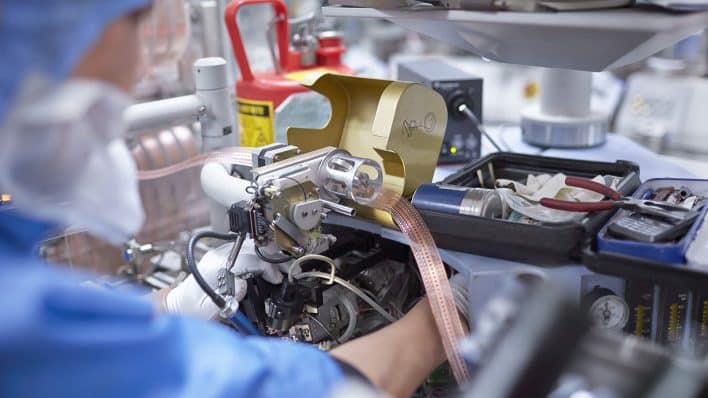

As part of Fernando’s EngD research project, a significant contribution was made to the field of HPC through the development of Job Prophet (JP), an innovative AI-powered tool designed to enhance memory allocation. Addressing the complex challenge of efficient memory management in large-scale HPC infrastructures, the EngD candidate applied Action Design Research and CRISP-DM to identify and implement best practices and intelligent automation strategies. By leveraging real-world HPC workload data and embedding continuous training, inference, and monitoring through robust CI/CD pipelines, JP demonstrated the ability to dynamically adapt to changing workloads while improving operational efficiency. The project not only delivered measurable gains in resource utilisation and job performance but also showcased the value of AI-driven decision-making in complex, data-intensive computing environments. This successful case exemplifies how rigorous research and applied engineering can converge to solve real-world challenges in HPC system optimisation.
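To make the idea of data-driven memory allocation more concrete, here is a minimal sketch. It is not Job Prophet's actual method, which is not described here; it only illustrates one simple baseline, assuming that a memory request is recommended as a high percentile of historical peak usage plus some safety headroom. The function name `recommend_memory_gb`, its parameters, and the sample data are all hypothetical.

```python
import math


def recommend_memory_gb(peak_usage_gb, percentile=95, headroom=0.10):
    """Hypothetical helper: suggest a memory request (in whole GB)
    from the peak memory usage of comparable past jobs."""
    if not peak_usage_gb:
        raise ValueError("need at least one historical observation")
    ordered = sorted(peak_usage_gb)
    # Nearest-rank percentile: the smallest observed value that
    # covers `percentile` percent of the historical jobs.
    rank = max(1, math.ceil(percentile / 100 * len(ordered)))
    baseline = ordered[rank - 1]
    # Add safety headroom and round up to a whole gigabyte.
    return math.ceil(baseline * (1 + headroom))


# Illustrative (made-up) peak usage of ten past runs, in GB.
history = [3.2, 4.1, 3.8, 4.0, 9.5, 3.9, 4.2, 4.4, 3.7, 4.3]
print(recommend_memory_gb(history))                 # conservative request
print(recommend_memory_gb(history, percentile=50))  # median-based request
```

A learned model would replace the fixed percentile with a per-job prediction, but the trade-off stays the same: a request that is too low risks failed jobs, while one that is too high wastes memory.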

In Pradheepan’s EngD project, a large language model (LLM)-based chatbot was developed to enhance user support within the NXP Design Infrastructure (NxDI), a critical platform used for managing semiconductor design simulations and workflows. The chatbot was created to intelligently handle high volumes of repetitive and routine support queries, which traditionally consumed significant time and resources from the engineering support team. By automating these interactions, the chatbot enabled faster response times and improved efficiency, allowing support staff to focus on complex, high-value tasks. The project employed cutting-edge natural language processing techniques to ensure accurate, context-aware responses tailored to the unique requirements of semiconductor design. Through continuous refinement based on real user feedback and integration within NxDI’s operational environment, the chatbot evolved into a reliable digital assistant that significantly improved the user experience. This successful implementation illustrates how LLM technologies can be harnessed within specialised engineering domains to drive operational excellence and innovation.

PhD Projects

Stefano Fossati’s PhD project focuses on improving HPC infrastructure observability and management through advanced visualisation techniques. The research aims to align architectural intent with operational reality, enhancing monitoring capabilities and increasing infrastructure efficiency. By providing visibility into resource utilisation, the project helps organisations improve efficiency and streamline operations, while enabling deeper insights into system behaviour and supporting improved management and optimisation. The project also emphasises failure analysis of HPC systems. By delivering robust mechanisms for performance inspection and issue diagnosis, it aims to improve the reliability and resilience of complex infrastructures.

Marco Tonnarelli’s PhD project is situated within the evolving landscape of modern, data product-oriented, decentralized big data architectures. The objective of his project is to develop a framework for automated policy-as-code enforcement within federated, computationally governed organizations. The research is organized across three interconnected layers: governance, architecture, and code. By identifying and analysing the key organizational challenges that arise during transitions to such architectures, Marco aims to explore architectural patterns from cutting-edge metadata management, an essential component in these systems, and ultimately design an automated framework for federated policy enforcement, leveraging Natural Language Processing (NLP) technologies. Methodologically, the project combines primary and secondary approaches from empirical software engineering. Due to the limited academic coverage of this emerging topic, industry insights are essential. The research currently includes a multivocal systematic literature review and a qualitative study, with plans to incorporate mining studies, ethnographic methods, case studies, and action research as it progresses.
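As a rough illustration of what policy-as-code means in this setting, the sketch below expresses governance rules as small executable checks that run against a data product's metadata. It is not the framework under development in the project; the `Policy` class, the two example rules, and the metadata fields (`owner`, `contains_pii`, `encrypted`) are assumptions made purely for illustration.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Policy:
    """Hypothetical policy: a name plus a check over product metadata."""
    name: str
    check: Callable[[dict], bool]  # returns True when the metadata complies


# Example rules a federated governance team might agree on (illustrative).
POLICIES = [
    Policy("has-owner", lambda m: bool(m.get("owner"))),
    Policy(
        "pii-requires-encryption",
        lambda m: not m.get("contains_pii", False) or m.get("encrypted", False),
    ),
]


def violations(metadata: dict, policies=POLICIES) -> list[str]:
    """Return the names of all policies that the data product violates."""
    return [p.name for p in policies if not p.check(metadata)]


# Made-up data product descriptor.
product = {
    "name": "wafer-yield",
    "owner": "team-fab",
    "contains_pii": True,
    "encrypted": False,
}
print(violations(product))
```

Because the rules are ordinary code, they can be versioned, reviewed, and evaluated automatically, for example in a CI pipeline, before a data product is published.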

Publications and Other Appointments

- ‘Let it be Chaos in the Plumbing!’ Usage and Efficacy of Chaos Engineering in DevOps Pipelines. Accepted at the 41st International Conference on Software Maintenance and Evolution (ICSME) 2025, Research Track.

- Data Catalog Tools: A Systematic Multivocal Literature Review. Accepted by the Journal of Systems and Software (Jul 2025).

- Enhanced Infrastructure Maintenance and Evolution Through Graph-based Visualization and Analysis. Submitted to the 41st International Conference on Software Maintenance and Evolution (ICSME) 2025, PhD Symposium.

- Chaos Engineering Evolution

- Defining Data Domains in Decentralized Data Architectures: A Dynamic Managerial Capabilities Perspective. To be submitted to IEEE Transactions on Engineering Management.

- Engineering AIOps Controllers for High-performance Software Operations: an Action Design Research Study. Under review at IEEE Transactions on Cloud Computing, submitted in March 2025.

- AI Assisted Chip Development Operations: an Industrial Case Study in the Semiconductor Field. Working paper.

- Large Language Models for NextGen Infrastructure as Code: Opportunities and Challenges. Working paper.

- Organising the 2025 POA Summit @ University of Stuttgart. September 18-19, 2025.